Activities

1. Start with an architectural draft

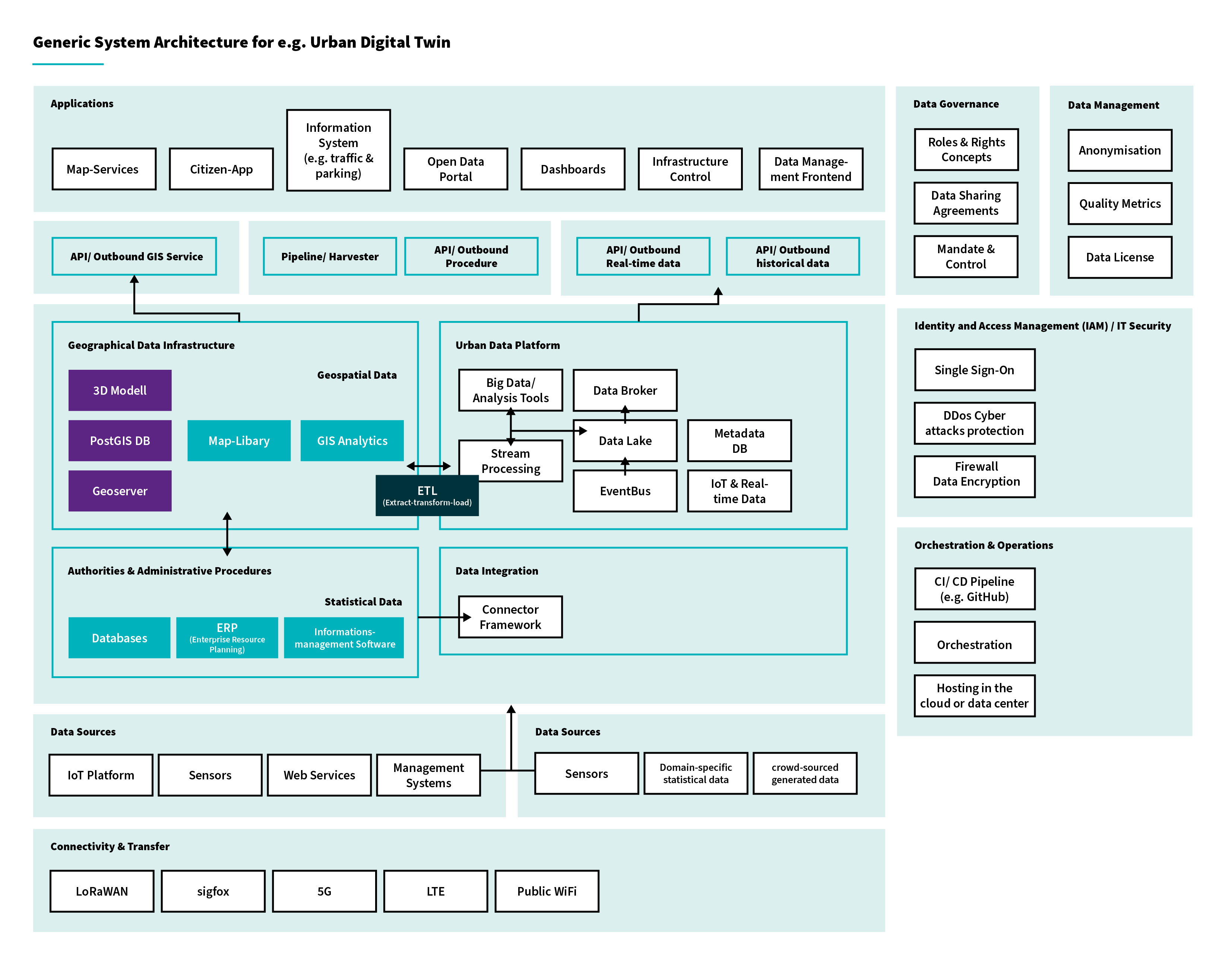

In this component, it is essential to define and align the overall system architecture for your data-driven use case. This involves identifying the different technical components involved, how they interact, and what authorisations, adjustments, or interfaces may be needed within existing infrastructure.

The goal is to ensure that your architecture supports data collection, storage, processing, analysis, and visualisation, while complying with legal, operational, and cybersecurity requirements.

Components to consider in the architecture:

- GDI: Define how your architecture will integrate with the municipal or national GDI.

- UDP: Assess how your use case connects to the existing Urban Data Platform. Have you identified all the data pipelines, APIs, and connectors? Is it compatible with existing metadata catalogues?

- Applications: Ensure that dashboards, mobile apps, public portals, and internal tools are connected to the backend.

2. Select technology

Once you have a clearer picture of your required system architecture, you can start selecting the platforms, tools, and frameworks that are still missing (e.g. cloud services, AI tools, GIS mapping software, etc.).

At this stage, it’s crucial to evaluate each new tool or platform carefully:

- How interoperable is it with your existing systems?

- Who holds data sovereignty?

- What is the maintenance and support model?

- What are the costs for implementation, long-term service and upkeep?

Keep in mind that even Open Source tools can lead to high maintenance costs if these aspects are not clearly understood and planned from the beginning.

3. Ensure data integration

If you are now starting the data integration, you have already made sure that your data complies with legal and privacy regulations. Where necessary, your data is anonymised, pseudonymised, and aggregated. Your datasets are standardised and include complete metadata (e.g. source, update frequency, licensing, schema). Their formats are harmonised (e.g. time formats, units, geospatial references); for geospatial data, this means consistent coordinate systems and spatial granularity.
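As an illustration of the checks described above, here is a minimal Python sketch that reports missing metadata fields and harmonises a timestamp and a unit. The function names, the choice of required fields, and the assumption that source timestamps are already in UTC are hypothetical and would need adapting to your own datasets.

```python
from datetime import datetime, timezone

# Metadata fields named in the text; treating all four as mandatory is an assumption.
REQUIRED_METADATA = ("source", "update_frequency", "licensing", "schema")


def missing_metadata(metadata: dict) -> list:
    """Return the required metadata fields that are absent or empty."""
    return [key for key in REQUIRED_METADATA if not metadata.get(key)]


def to_utc_iso(timestamp: str, fmt: str) -> str:
    """Parse a timestamp in a local format and return it as ISO 8601.

    Assumption: the source timestamp is already expressed in UTC.
    """
    parsed = datetime.strptime(timestamp, fmt).replace(tzinfo=timezone.utc)
    return parsed.isoformat()


def fahrenheit_to_celsius(value: float) -> float:
    """Convert a temperature so that all datasets share one unit."""
    return round((value - 32) * 5 / 9, 2)


if __name__ == "__main__":
    print(missing_metadata({"source": "traffic department", "schema": ""}))
    print(to_utc_iso("31.12.2024 23:59", "%d.%m.%Y %H:%M"))
    print(fahrenheit_to_celsius(212))
```

Running such checks before a dataset enters the platform makes gaps visible early, when they are still cheap to fix.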

This solution offers a practical, ready-to-use framework that helps municipalities turn scattered, underused data into a well-governed, interoperable, and innovation-driving asset, making it a must-see for anyone working in public-sector digital transformation.

Semantic interoperability is especially important when merging datasets from different municipal departments or external partners.

You’ve also clarified the ownership and usage rights of your data. Typically, you’ll be working with open data, shared data, or shared analysis results. Data intermediaries and intermediation services can support you in preparing or acquiring the needed datasets.

As part of integration, resolve any open questions related to cloud services and cybersecurity. Depending on your use case, you may have specific requirements for storage capacity, especially for live data. Decide early on what kind of data archiving system and concept you need to store, access, and evaluate collected data.

This solution introduces a practical metadata schema that tackles the toughest technical, organisational, ethical, and regulatory barriers to smart-city interoperability, making it an essential resource for anyone trying to seamlessly connect heterogeneous data sources.

In this phase, open APIs are essential for integrating data from different systems. You should integrate not only real-time data (e.g. from your own scanners), but also historical data, which can be valuable for forecasts and analysis.
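To show how real-time and historical data can end up in one series, the following Python sketch merges two lists of readings. It assumes, hypothetically, that each reading is a `(timestamp, value)` pair, that timestamps are ISO 8601 strings in the same time zone (so they sort correctly as text), and that the live value should win when both sources cover the same timestamp.

```python
def merge_readings(historical, live):
    """Merge (timestamp, value) pairs into one time-ordered series.

    Assumption: on a duplicate timestamp, the live reading replaces the
    historical one.
    """
    merged = dict(historical)
    merged.update(dict(live))
    return sorted(merged.items())


if __name__ == "__main__":
    archive = [("2024-01-01T00:00:00Z", 1.0), ("2024-01-01T01:00:00Z", 2.0)]
    stream = [("2024-01-01T01:00:00Z", 2.5), ("2024-01-01T02:00:00Z", 3.0)]
    for timestamp, value in merge_readings(archive, stream):
        print(timestamp, value)
```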

ETL pipelines (Extract, Transform, Load) help you manage how data flows into your platform. Consider tools and existing workflows to automate this process, including error handling and logging.
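The following Python sketch shows one possible shape for such a pipeline, with error handling and logging built in. The record layout (`sensor_id`, `value`) and the function names are hypothetical; in practice, the extract step would read from an API, file, or database, and the load step would write to your platform rather than return a list.

```python
import logging

logger = logging.getLogger("etl")


def extract(rows):
    """Extract step: here the rows are simply passed in for illustration."""
    return list(rows)


def transform(row):
    """Transform step: clean the identifier and convert the value to a number."""
    return {"sensor_id": row["sensor_id"].strip(), "value": float(row["value"])}


def run_pipeline(rows):
    """Run extract and transform, keeping rejected rows instead of aborting."""
    loaded, rejected = [], []
    for row in extract(rows):
        try:
            loaded.append(transform(row))
        except (KeyError, ValueError, TypeError, AttributeError) as exc:
            # Log the problem and continue, so one bad row does not stop the run.
            logger.warning("Rejected row %r: %r", row, exc)
            rejected.append(row)
    logger.info("Loaded %d rows, rejected %d", len(loaded), len(rejected))
    return loaded, rejected


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    sample = [
        {"sensor_id": " a1 ", "value": "3.5"},
        {"sensor_id": "b2", "value": "n/a"},
        {"value": "1"},
    ]
    run_pipeline(sample)
```

Keeping the rejected rows alongside the log makes it easier to assign data errors to the responsible role later on.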

4. Develop an MVP (Minimum Viable Product)

During development you will dive into the data integration and have to tackle various problems. It is therefore important to define the simplest version of the product that you need to build to answer your initial problem. For your MVP, you can prioritise integrating only the minimum necessary data, so that you can confirm the solution brings value.

Early feedback from users may reveal that some datasets are unnecessary or that additional ones are needed.

The MVP also lets you check and clarify your data governance roles and responsibilities. Are the maintenance of integrated data, the updating of pipelines, and the handling of data errors working as intended?

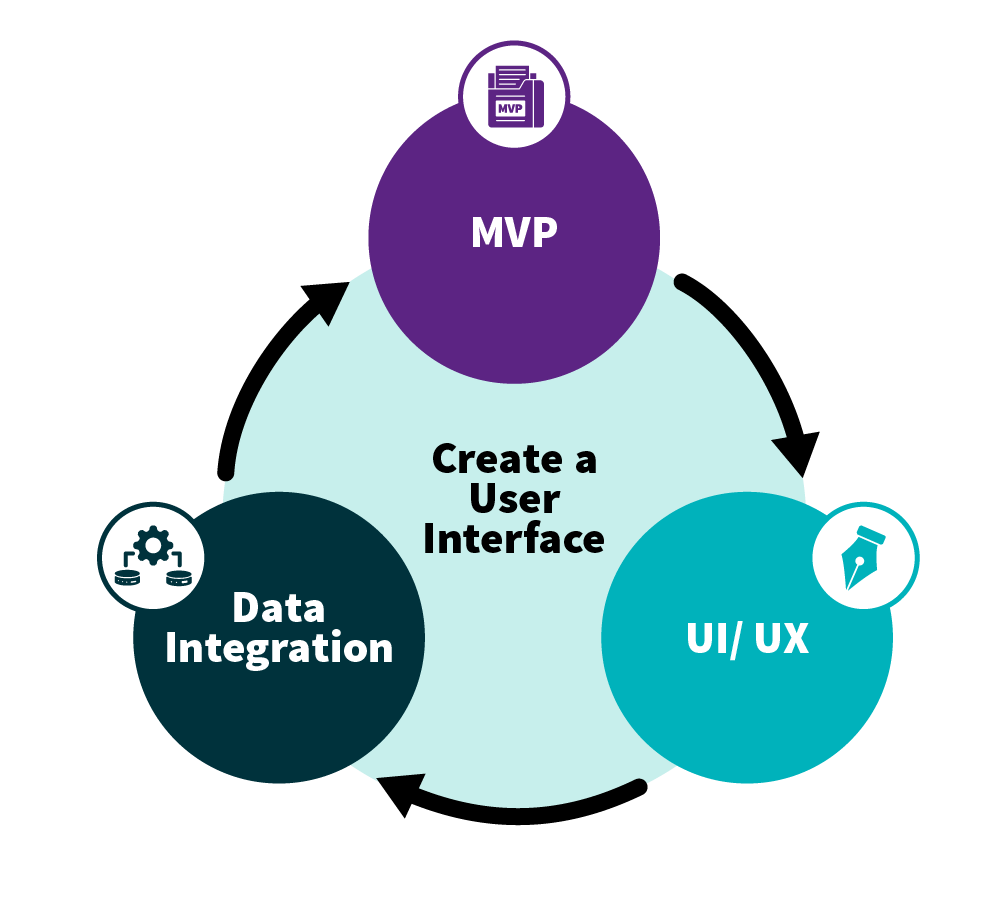

5. Create a user interface

It is important that the user interface follows your organisation's corporate design. This makes it easier for employees, and eventually citizens, to identify with the new tool and to use the interface.

For those responsible for the data integration, it is crucial to know in advance how the integrated data will be displayed (types of dashboards, graphs, maps…) so that they can structure it accordingly during the integration.

Since this is an MVP, some data will be missing, delayed, or still incorrect. For the user experience, it is important to plan what the user interface will display in those cases.

MVP, UI/UX, and data integration are a connected trio, so it is important to treat them as an iterative loop.
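One way to plan for missing or delayed data is to let the backend attach a display state to every value, so the interface can show a clear message instead of an empty or misleading chart. The Python sketch below is a minimal example; the state names and the freshness threshold are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def display_state(value, last_update: Optional[datetime],
                  now: datetime, max_age: timedelta) -> str:
    """Classify a data point so the interface knows what to show."""
    if value is None or last_update is None:
        return "no_data"   # e.g. show "No data available yet"
    if now - last_update > max_age:
        return "stale"     # e.g. show the value with a "last updated" note
    return "ok"


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    print(display_state(21.5, now - timedelta(minutes=5), now, timedelta(hours=1)))
    print(display_state(21.5, now - timedelta(hours=3), now, timedelta(hours=1)))
    print(display_state(None, None, now, timedelta(hours=1)))
```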

6. Check cybersecurity and test the MVP

It is now time to test your MVP with real users! This will help you adjust data flows and visual elements. The feedback loops will also help validate whether the data integration meets the expectations and needs of the first users.

At this point, you may also focus on the intuitive use of your solution, not just for data experts but also for non-technical users (e.g. municipal employees, citizens), your final target group. This may be a good time to start preparing guides and info sessions.

Verify compliance with data protection, cybersecurity, and public sector IT regulations again, since your MVP may differ from your original planning after the feedback loop.

Here are some key thoughts on cybersecurity aspects during and after the MVP testing:

- Document how you comply with cybersecurity policies. This may be required for audits or future expansion.

- Use secure protocols for the data transfers from APIs, databases, sensors etc.

- Protect API endpoints against unauthorised access.

- Put access control, authentication, logging, and monitoring in place already for the MVP, so that you can track suspicious behaviour and send alerts when defined anomalies occur.

- Ensure backup and recovery procedures are in place: what happens if there is data loss?

- Vulnerability scanning and penetration testing: Perform automated vulnerability scans of your application and infrastructure.
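As a small illustration of protecting an endpoint against unauthorised access, the Python sketch below verifies that an incoming request was signed with a shared secret before it is processed. It is only one possible approach and not a complete security concept; the function names and the shared-secret setup are assumptions, and rejected requests are logged so that monitoring can pick them up.

```python
import hashlib
import hmac
import logging

logger = logging.getLogger("api-security")


def sign(payload: bytes, secret: bytes) -> str:
    """Compute an HMAC-SHA256 signature for a request payload."""
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


def is_authorised(payload: bytes, signature: str, secret: bytes) -> bool:
    """Check a hex signature using a constant-time comparison."""
    expected = sign(payload, secret)
    authorised = hmac.compare_digest(expected, signature)
    if not authorised:
        # Logged so that monitoring can raise an alert on repeated failures.
        logger.warning("Rejected request with invalid signature")
    return authorised


if __name__ == "__main__":
    secret = b"replace-with-a-secret-from-your-key-store"
    body = b'{"sensor_id": "a1", "value": 3.5}'
    print(is_authorised(body, sign(body, secret), secret))
    print(is_authorised(b"tampered", sign(body, secret), secret))
```

In a real deployment, the secret would come from a managed key store and the transfer itself would still use a secure protocol such as HTTPS.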

Resources

1. D4A Best Practices

2. D4A Training Modules

3. "Go deeper" - Insights on a Method

- Design Thinking

4. Academic papers

In our D4A knowledge base you will find more on the topics of data openness, data management, and data risks.

Sources