Activities

1. Implement the service in a limited test location

Before rolling out a data solution or service on a large scale, it is essential to first test it in a limited, controlled location. This pilot test allows for the identification of technical issues, user experience challenges, and potential data quality concerns. By starting small, teams can gather valuable feedback, refine and adjust the system, and make sure it aligns with all goals and operational requirements. A localised rollout also minimises risk, saves costs, and increases the likelihood of success when the solution is expanded across broader regions or departments.

2. Prepare the infrastructure for further rollout

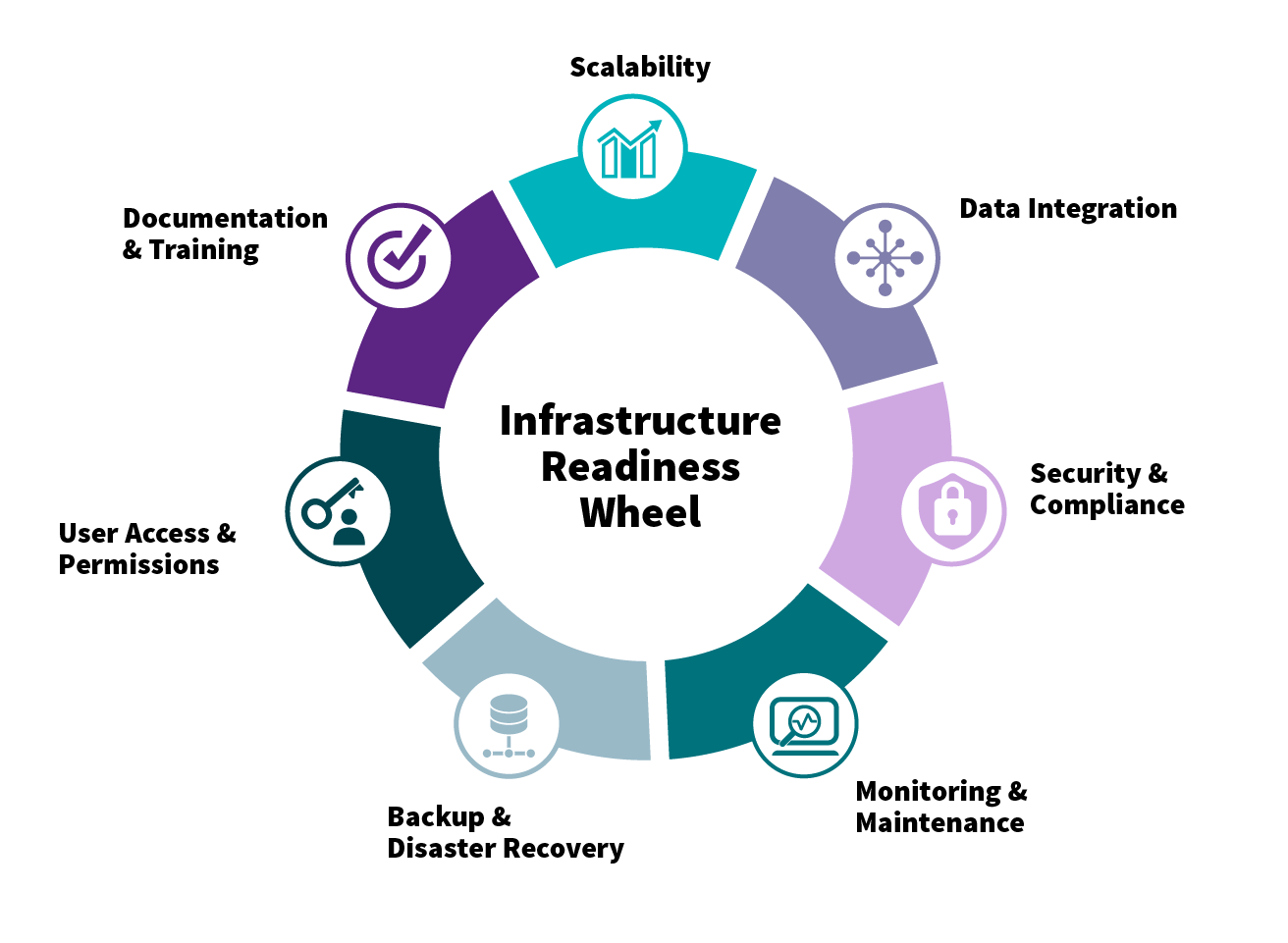

To prepare the data infrastructure for a broader rollout of a data solution or service, several key aspects must be addressed:

- Scalability

- Data integration

- Security and compliance

- Monitoring and maintenance

- Backup and disaster recovery

- User access and permissions

- Documentation and training

By addressing these areas, organisations can ensure that the data infrastructure is resilient, efficient, and ready to support a full-scale rollout.

3. Implement monitoring systems

Data monitoring ensures that any submitted data is reliable and meets predefined criteria. It continuously checks that the data conforms to the rules, even when those rules change.

Implementing robust monitoring is essential to keep the data solution running smoothly, catch issues early, and ensure that performance meets expectations.

What to monitor:

- Data pipeline health: Track success/failure rates, processing times, data latency, and throughput.

- Data quality: Monitor for anomalies like missing values, schema changes, duplicates, or outdated data.

- Infrastructure metrics: CPU usage, memory consumption, network throughput.

- User behaviour and service logs: API usage patterns, user adoption rates, and error logs.
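The data quality checks listed above can be sketched in a few lines of Python. This is a minimal illustration, not a production monitor: the function name `check_data_quality`, the field names (`sensor_id`, `value`, `measured_at`) and the one-day freshness limit are assumptions made for the example.

```python
from datetime import datetime, timedelta, timezone


def check_data_quality(records, expected_fields, key_field, timestamp_field, max_age, now):
    """Count common data quality problems in a list of dict records."""
    expected = set(expected_fields)
    seen_keys = set()
    report = {"missing_values": 0, "schema_changes": 0, "duplicates": 0, "outdated": 0}
    for record in records:
        # Schema change: the record's fields differ from the expected set.
        if set(record) != expected:
            report["schema_changes"] += 1
        # Missing value: an expected field is absent, None, or an empty string.
        if any(record.get(field) in (None, "") for field in expected_fields):
            report["missing_values"] += 1
        # Duplicate: the same key has already been seen in this batch.
        key = record.get(key_field)
        if key is not None:
            if key in seen_keys:
                report["duplicates"] += 1
            seen_keys.add(key)
        # Outdated: the measurement is older than the allowed maximum age.
        timestamp = record.get(timestamp_field)
        if timestamp is not None and now - timestamp > max_age:
            report["outdated"] += 1
    return report


# Hypothetical sample batch; a fixed "now" keeps the example reproducible.
now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
hour = timedelta(hours=1)
records = [
    {"sensor_id": "a1", "value": 3.2, "measured_at": now - hour},
    {"sensor_id": "a1", "value": 3.2, "measured_at": now - hour},       # duplicate key
    {"sensor_id": "b2", "value": None, "measured_at": now - 2 * hour},  # missing value
    {"sensor_id": "c3", "value": 1.0, "measured_at": now - 72 * hour},  # outdated
    {"sensor_id": "d4", "value": 2.0, "measured_at": now - hour, "unit": "C"},  # extra field
]
report = check_data_quality(
    records,
    expected_fields=["sensor_id", "value", "measured_at"],
    key_field="sensor_id",
    timestamp_field="measured_at",
    max_age=timedelta(days=1),
    now=now,
)
print(report)
```

In practice, a check like this would run on every incoming batch, and its counts would be exported as metrics to whichever monitoring tool is in use.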

It is advisable to set up automated alerts, for example when anomalies occur in KPIs or model outputs, or when performance degrades. Open-source tools offer a low-cost way to implement these tasks. Here are three suggestions for open-source tools that support monitoring:

- Prometheus https://github.com/prometheus/prometheus

- Apache Superset https://github.com/apache/superset

- Apache Airflow https://github.com/apache/airflow
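As an illustration of the automated alerts mentioned above, the following sketch flags a KPI value that deviates strongly from its recent history. It uses only the Python standard library and a simple z-score rule; the threshold of 3, the metric name `processing_time_seconds` and the `notify` callback are assumptions made for the example. Tools such as Prometheus ship their own alerting mechanisms, which this sketch does not reproduce.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, z_threshold=3.0):
    """Return True if `latest` lies more than z_threshold standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough data to estimate a spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # history is constant, so any change is unusual
    return abs(latest - mu) / sigma > z_threshold


def check_and_alert(metric_name, history, latest, notify):
    """Call `notify` with a message when the latest value looks anomalous."""
    if is_anomalous(history, latest):
        notify(f"ALERT: {metric_name} = {latest} deviates from recent values")
        return True
    return False


# Hypothetical pipeline processing times in seconds.
history = [120, 118, 125, 122, 119, 121]
alerts = []  # stands in for an e-mail, chat, or pager integration

normal_run = check_and_alert("processing_time_seconds", history, 123, alerts.append)
slow_run = check_and_alert("processing_time_seconds", history, 310, alerts.append)
print(alerts)
```

A fixed z-score threshold is only one possible rule. Seasonal or strongly skewed metrics usually need a different baseline, such as a comparison with the same weekday in previous weeks.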

4. Create participatory data

The first step is to classify your data. Ask yourself: Is the data safe to share with anyone (open data)? Does it require authentication, or should it be available only with limited access (restricted data)? Or is the data confidential or sensitive in a way that rules out public use altogether? To answer these questions consistently, define a data classification policy that clarifies how each dataset is handled.
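A data classification policy can also be expressed in machine-readable form, so that publishing tools can look up how a dataset must be handled. The sketch below is one possible encoding. The three classes follow the questions above, while the decision rule in `classify` and the handling descriptions are illustrative assumptions that each organisation would replace with its own policy.

```python
from enum import Enum


class Classification(Enum):
    OPEN = "open"
    RESTRICTED = "restricted"
    CONFIDENTIAL = "confidential"


# Hypothetical handling rules per class; adapt these to the local policy.
HANDLING_POLICY = {
    Classification.OPEN: "publish on the open data portal without login",
    Classification.RESTRICTED: "share with authenticated users only",
    Classification.CONFIDENTIAL: "keep internal, no public access",
}


def classify(contains_personal_data, approved_for_public_release):
    """Assumed decision rule: personal data is always treated as confidential."""
    if contains_personal_data:
        return Classification.CONFIDENTIAL
    if approved_for_public_release:
        return Classification.OPEN
    return Classification.RESTRICTED


# Hypothetical datasets with the two answers needed by the rule above.
datasets = {
    "bus_stop_locations": classify(contains_personal_data=False, approved_for_public_release=True),
    "energy_use_per_building": classify(contains_personal_data=False, approved_for_public_release=False),
    "social_care_cases": classify(contains_personal_data=True, approved_for_public_release=False),
}
for name, label in datasets.items():
    print(f"{name}: {label.value} -> {HANDLING_POLICY[label]}")
```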

Next, apply data anonymisation and aggregation. Remove or mask personally identifiable information (PII), e.g. names, addresses, birthdates, and GPS-level locations. Where possible, aggregate the data instead, e.g. show neighbourhoods rather than individual households.

Use role-based access control (RBAC). If certain datasets need controlled access, grant role-specific access, for example to researchers or particular departments. Public users mostly get limited views or summary statistics. Tools like CKAN, Socrata or Keycloak support access controls.

Furthermore, secure the data infrastructure: use HTTPS for secure transmission, implement rate limiting to prevent abuse, use logging and monitoring to detect unusual access patterns, and regularly patch and update backend services.
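The PII removal, aggregation, and role-specific views described above can be sketched as follows. The field names, the role name `researcher`, and the minimum group size of 3 are assumptions made for the example. Suppressing small groups is an extra safeguard added here and is not prescribed by the text. Removing direct identifiers alone does not guarantee anonymity, so treat this as a starting point rather than a complete anonymisation method.

```python
from collections import Counter

# Assumed set of direct identifiers to strip before sharing.
PII_FIELDS = {"name", "address", "birthdate", "gps"}


def strip_pii(record):
    """Return a copy of the record without direct identifiers."""
    return {key: value for key, value in record.items() if key not in PII_FIELDS}


def aggregate_by_neighbourhood(records, min_count=3):
    """Count households per neighbourhood and suppress groups smaller than min_count."""
    counts = Counter(record["neighbourhood"] for record in records)
    return {area: count for area, count in counts.items() if count >= min_count}


def view_for_role(records, role):
    """Researchers get row-level data without PII; every other role gets aggregates only."""
    if role == "researcher":
        return [strip_pii(record) for record in records]
    return aggregate_by_neighbourhood(records)


# Hypothetical household records.
households = [
    {"name": "A. Example", "address": "Street 1", "neighbourhood": "Centrum", "energy_kwh": 310},
    {"name": "B. Example", "address": "Street 2", "neighbourhood": "Centrum", "energy_kwh": 280},
    {"name": "C. Example", "address": "Street 3", "neighbourhood": "Centrum", "energy_kwh": 295},
    {"name": "D. Example", "address": "Street 4", "neighbourhood": "Centrum", "energy_kwh": 330},
    {"name": "E. Example", "address": "Quay 9", "neighbourhood": "Hamnen", "energy_kwh": 410},
]

public_view = view_for_role(households, "public")
researcher_view = view_for_role(households, "researcher")
print(public_view)
print(researcher_view[0])
```

Unknown roles fall through to the aggregated view, so a misconfigured role never exposes row-level data.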

5. Communicate and document data processing

Education is key to the safe use of data. Data literacy programmes should be run not only for citizens but also for specialists. They should provide guides and guidelines on how to use data responsibly, showcasing examples across different dimensions: technical, legal, and ethical.

Another good way to uncover loopholes or errors in your data system is to let citizens flag problematic or sensitive datasets. Involve communities in data governance and set up review boards.

Resources

D4A Best Practices

D4A Training Modules

- Kungsbacka: Introduction to Open Data and its Value

- University of Gothenburg: Open Data in the municipal context

- University of Gothenburg: Challenges of Open Data in the municipal context

- University of Gothenburg: Values of Open Data in the municipal context

- Intercommunale Leiedal: Data project management flow

Academic papers

"To go further" - Insights on a Method

Sources

Device Magic (2023) Running a Successful Pilot Program - Step-By-Step Guide. Available at: https://www.devicemagic.com/blog/step-by-step-guide-to-running-a-pilot-program/

Testsigma (2025) Pilot Testing in Software Testing. Available at: https://testsigma.com/blog/pilot-testing/

Urban Transformations (2024) From pilot to practice: navigating pre-requisites for up-scaling sustainable urban solutions. Available at: https://urbantransformations.biomedcentral.com/articles/10.1186/s42854-024-00063-5

Power Consulting (2025) Top IT Infrastructure Best Practices Everyone Needs to Follow. Available at: https://powerconsulting.com/blog/it-infrastructure-best-practices/

Microsoft (n.d.) Guidance and best practices - Azure Backup. Available at: https://learn.microsoft.com/en-us/azure/backup/guidance-best-practices

RudderStack (n.d.) Data pipeline monitoring: Tools and best practices. Available at: https://www.rudderstack.com/blog/data-pipeline-monitoring/

Monte Carlo (2025) Data Quality Monitoring Explained. Available at: https://www.montecarlodata.com/blog-data-quality-monitoring/

Airbyte (2025) Data Quality Monitoring: Key Metrics, Benefits & Techniques. Available at: https://airbyte.com/data-engineering-resources/data-quality-monitoring

Ada Lovelace Institute (n.d.) Participatory data governance. Available at: https://www.adalovelaceinstitute.org/project/participatory-data-governance/

The ODI (n.d.) Participatory data. Available at: https://theodi.org/insights/projects/participatory-data/

Atlan (2023) Data Governance vs Data Classification: 5 Key Differences. Available at: https://atlan.com/data-governance-data-classification/

Palo Alto Networks (n.d.) What Is Data Classification?. Available at: https://www.paloaltonetworks.com/cyberpedia/data-classification

DataCamp (2024) What is Data Literacy? A 2025 Guide for Data & Analytics Leaders. Available at: https://www.datacamp.com/blog/what-is-data-literacy-a-comprehensive-guide-for-organizations

DataQG (2025) Growing Data Governance Awareness. Available at: https://dataqg.com/articles/growing-data-governance-awareness/

Gartner (2024) Data Literacy: A Guide to Building a Data-Literate Organization. Available at: https://www.gartner.com/en/data-analytics/topics/data-literacy